Introduction

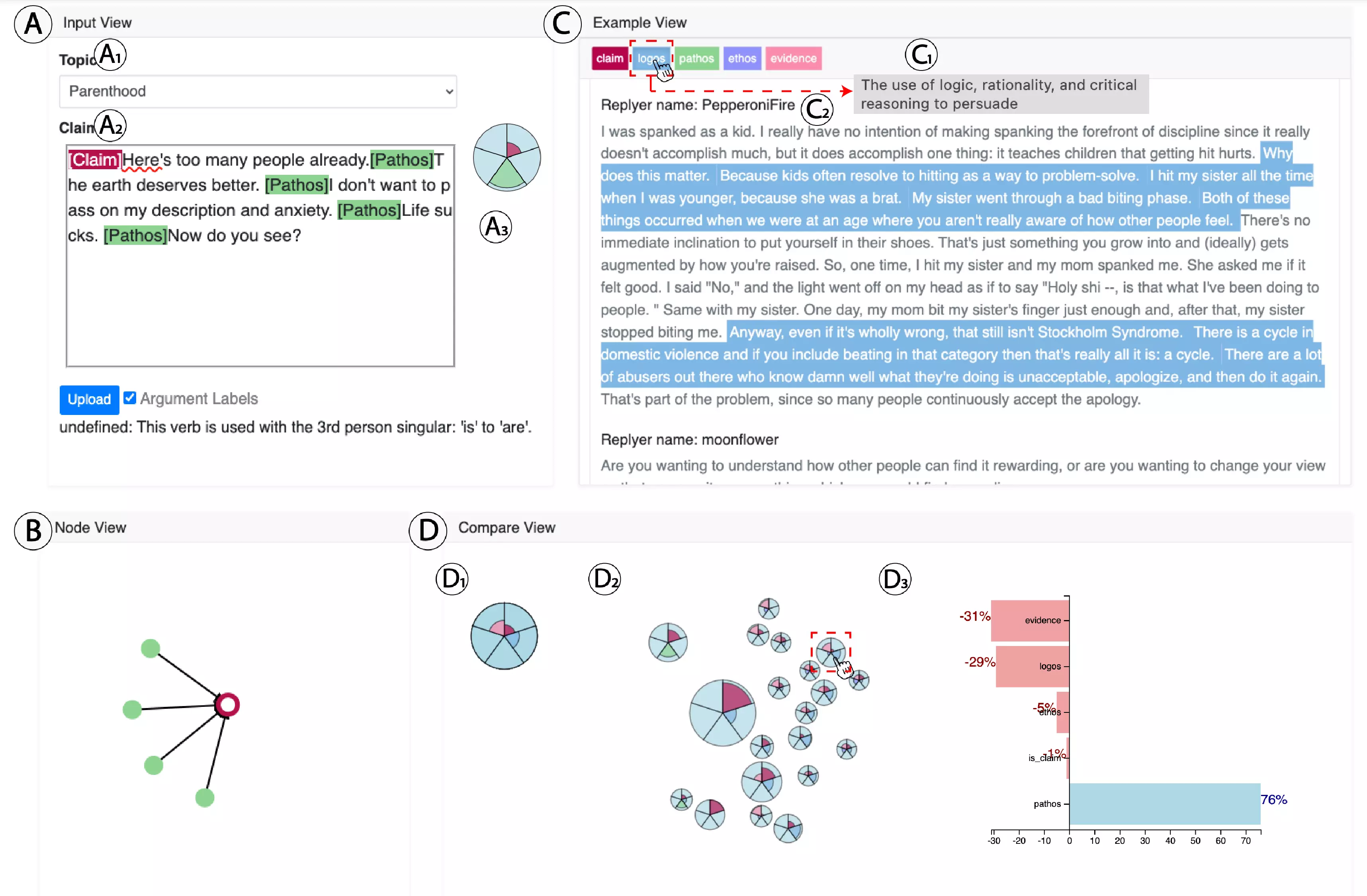

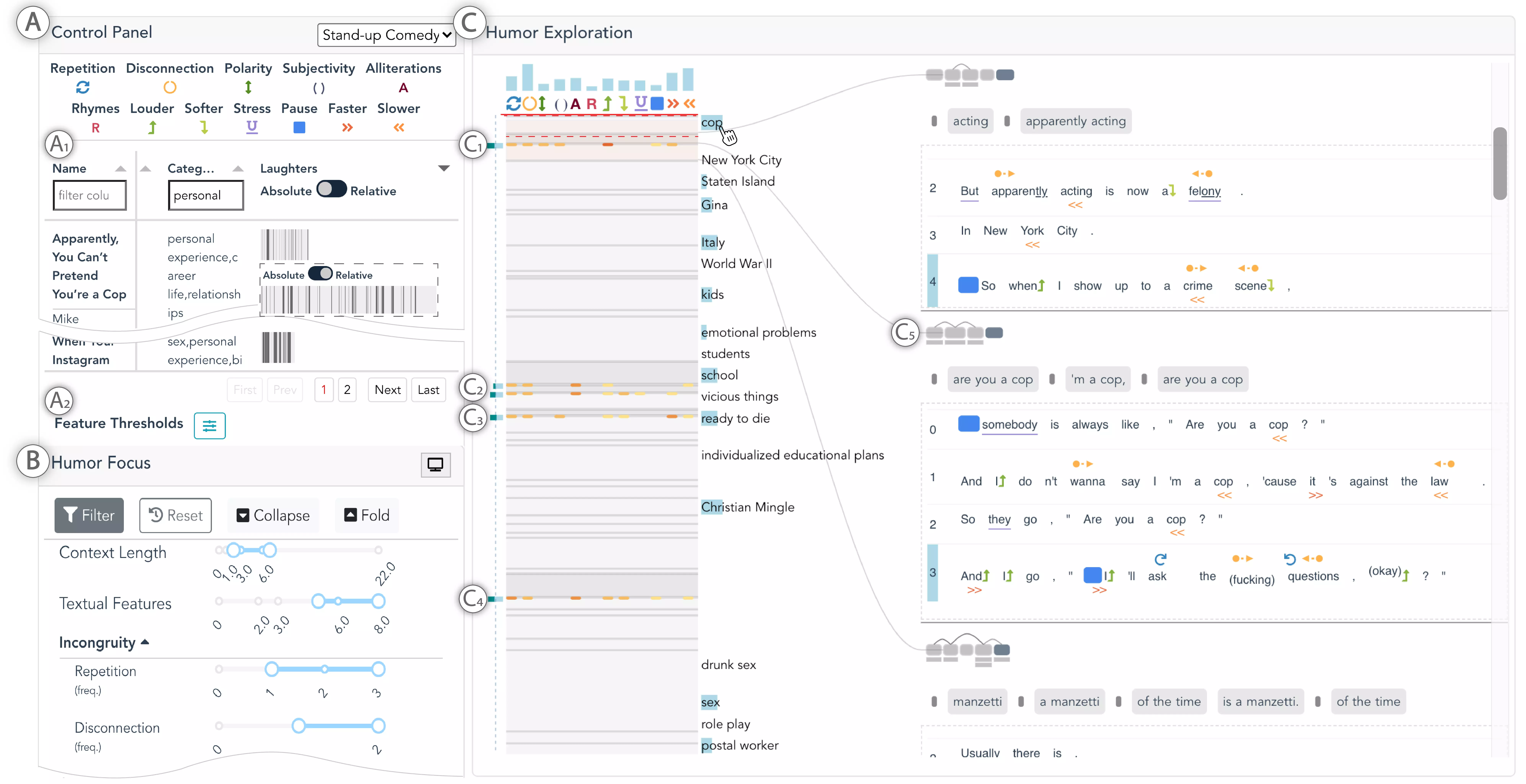

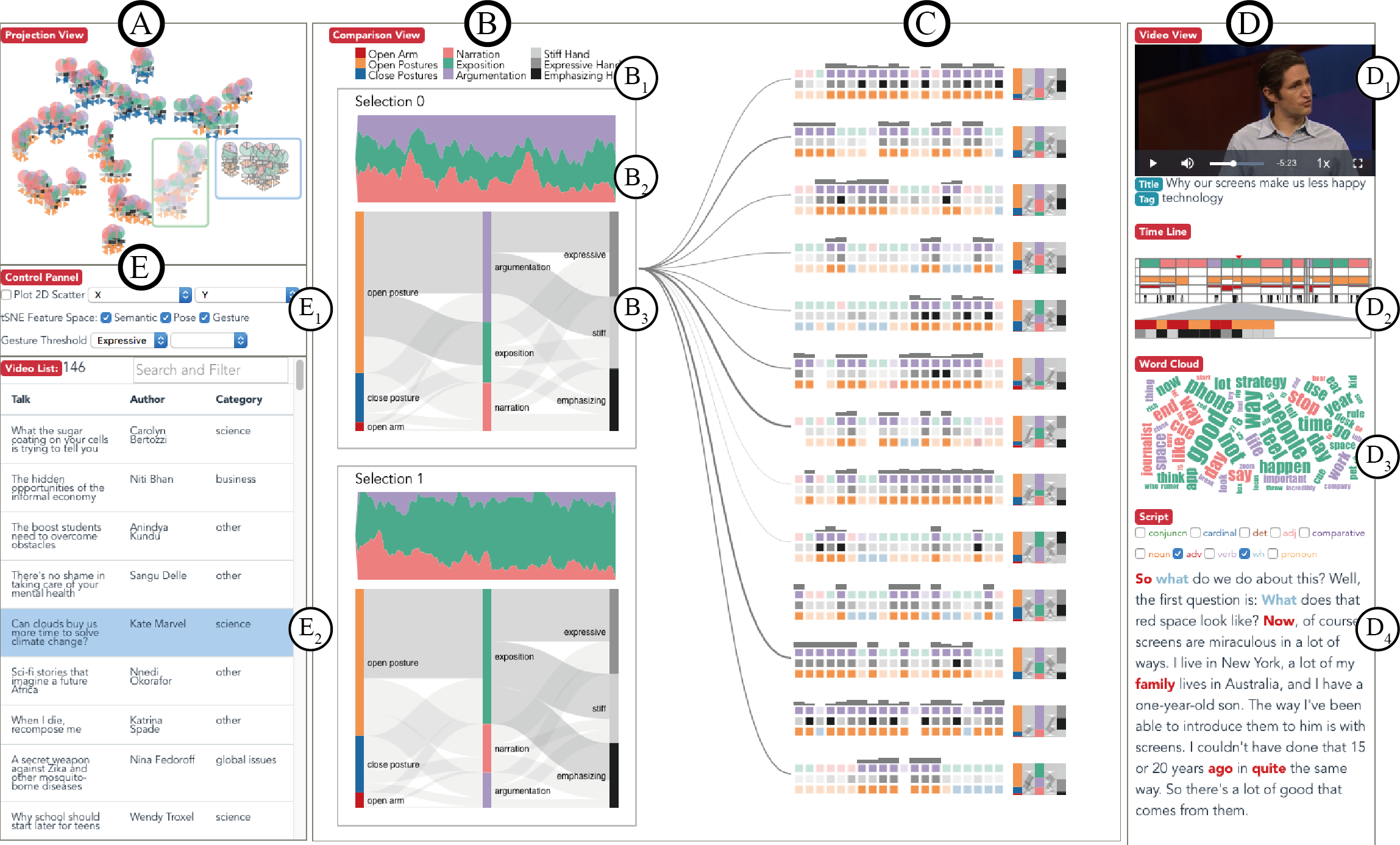

Human communication naturally relies on the dynamic exchange of information across multiple channels, such as facial expressions, prosody, speech content, and body language. The multimodal data generated during communication have opened up new possibilities for intelligent education and smart training services, especially in classroom and presentation training scenarios. Realizing these possibilities depends on turning vast amounts of information into knowledge and making better use of the data. Visualization is closely tied to knowledge expression and is an important means of interpreting complex data.

We are the MMComVis group at HKUST VisLab. To help people better understand human communication behavior and performance, we have studied this topic for years and published a number of related works in the field. We have also built practical techniques to support human communication training. Our aim is to harness visual analytics to effectively explore and make use of the multimodal data generated during communication. If you are interested in our work, please feel free to contact us.